MySQL Fabric High-Availability Cluster Sharding Functional Test

Author: reposted from the web   Published: [ 2015/2/9 14:50:27 ]

c) View the data in the group_id-1 HA group

mysql -P 3306 -h 192.168.1.76 -u root -e "select * from test.subscribers"

mysql -P 3306 -h 192.168.1.70 -u root -e "select * from test.subscribers"

Output:

+--------+------------+-----------+
| sub_no | first_name | last_name |
+--------+------------+-----------+
|    500 | Billy      | Joel      |
|   1500 | Arthur     | Askey     |
|   5000 | Billy      | Fish      |
|  17542 | Bobby      | Ball      |
|     22 | Billy      | Bob       |
|   8372 | Banana     | Man       |
|  93846 | Bill       | Ben       |
|  15050 | John       | Smith     |
+--------+------------+-----------+
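The two commands above query two servers of the same HA group, so their outputs should contain identical rows. A SELECT without ORDER BY does not guarantee row order, so a consistency check should compare the result sets as multisets rather than line by line. A minimal sketch, assuming the rows have already been fetched from each server (for example with `cursor.fetchall()` in mysql.connector); the function name `same_rows` is hypothetical:

```python
from collections import Counter


def same_rows(rows_a, rows_b):
    """Return True if two result sets contain the same rows, ignoring order.

    Rows are compared as a multiset, so a row that appears twice on one
    server but once on the other counts as a mismatch.
    """
    return Counter(map(tuple, rows_a)) == Counter(map(tuple, rows_b))


# A few rows from the table above, returned in a different order by each server
rows_76 = [(500, "Billy", "Joel"), (1500, "Arthur", "Askey"), (22, "Billy", "Bob")]
rows_70 = [(22, "Billy", "Bob"), (500, "Billy", "Joel"), (1500, "Arthur", "Askey")]
print(same_rows(rows_76, rows_70))  # True
```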

d) Use the Python interface to read all rows of the sharded table test.subscribers

python read_table_ha.py

Output:

(u'Billy', u'Bob')
(u'Billy', u'Fish')
(u'Billy', u'Joel')
(u'Arthur', u'Askey')
(u'Banana', u'Man')
(u'Billy', u'Fish')
(u'Bill', u'Ben')
(u'Jimmy', u'White')
(u'John', u'Smith')
(u'Bobby', u'Ball')

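Conclusion: these results show that when the master (PRIMARY) of HA group group_id-1 crashes, MySQL Fabric automatically promotes a slave (SECONDARY) to master, and the original data can still be queried normally. A crash of a single database server in a MySQL Fabric HA cluster therefore does not compromise data integrity.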
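The failover behaviour described in the conclusion can be illustrated with a toy model. This is not Fabric's implementation: the function `fail_over` is hypothetical, the server records only mimic the dictionaries printed by `mysqlfabric group lookup_servers`, and the candidate is simply the SECONDARY with the highest `weight` (the first one on a tie). Fabric's own candidate selection may apply additional checks, such as replication state, that this sketch ignores.

```python
def fail_over(servers, failed_address):
    """Toy failover for one HA group (not Fabric's algorithm).

    Marks the failed server FAULTY and, if it was the PRIMARY, promotes the
    SECONDARY with the highest weight. Returns the new PRIMARY's address,
    or None if no promotion happened.
    """
    was_primary = False
    for server in servers:
        if server["address"] == failed_address:
            was_primary = server["status"] == "PRIMARY"
            server["status"] = "FAULTY"
    if not was_primary:
        return None  # losing a SECONDARY does not trigger an election
    candidates = [s for s in servers if s["status"] == "SECONDARY"]
    if not candidates:
        return None  # nothing left to promote
    # max() keeps the first candidate among equal weights
    new_primary = max(candidates, key=lambda s: s.get("weight", 1.0))
    new_primary["status"] = "PRIMARY"
    return new_primary["address"]


group = [
    {"address": "192.168.1.70:3306", "status": "PRIMARY", "weight": 1.0},
    {"address": "192.168.1.71:3306", "status": "SECONDARY", "weight": 1.0},
    {"address": "192.168.1.76:3306", "status": "SECONDARY", "weight": 1.0},
]
print(fail_over(group, "192.168.1.70:3306"))  # 192.168.1.71:3306
```

With equal weights the first SECONDARY in the list wins; in the model, reads of existing data keep working because the promoted server already holds a replicated copy.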

PS: Manually recovering a database instance in the FAULTY state

A server in a MySQL Fabric HA group can be in one of four states: PRIMARY, SECONDARY, FAULTY or SPARE.

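Shutting down the PRIMARY: the PRIMARY cannot be removed from the group directly. Use mysqlfabric group demote group_id-1 to demote the group's PRIMARY first. This does not elect a new master, and it does not disable failure detection.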
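The effect of `group demote` on the server states, as described above, can be sketched as a small state update. The sketch assumes the demoted PRIMARY becomes a SECONDARY; the group layout (`failure_detector`, `servers`) and the function name `demote` are hypothetical and only model the rules stated in the text: no new PRIMARY is elected and failure detection is left as it was.

```python
def demote(group):
    """Model of `mysqlfabric group demote` (hypothetical data layout).

    The PRIMARY becomes a SECONDARY, no new PRIMARY is elected, and the
    failure-detection flag is not touched.
    """
    for server in group["servers"]:
        if server["status"] == "PRIMARY":
            server["status"] = "SECONDARY"
    return group


group = {
    "failure_detector": True,
    "servers": [
        {"address": "192.168.1.70:3306", "status": "PRIMARY"},
        {"address": "192.168.1.71:3306", "status": "SECONDARY"},
    ],
}
demote(group)
print([s["status"] for s in group["servers"]])  # ['SECONDARY', 'SECONDARY']
print(group["failure_detector"])  # True
```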

a) When one database instance crashes, remove it from the group, restart the instance, and then add it back to the group, for example:

mysqlfabric group remove group_id-1 7a45f71d-7934-11e4-9e8c-782bcb74823a

mysqlfabric group add group_id-1 192.168.1.71:3306

mysqlfabric group lookup_servers group_id-1

Output:

Command :
{ success = True
  return = [{'status': 'SECONDARY', 'server_uuid': '7a45f71d-7934-11e4-9e8c-782bcb74823a', 'mode': 'READ_ONLY', 'weight': 1.0, 'address': '192.168.1.71:3306'}, {'status': 'SECONDARY', 'server_uuid': '9cf162ca-7934-11e4-9e8d-782bcb1b6b98', 'mode': 'READ_ONLY', 'weight': 1.0, 'address': '192.168.1.76:3306'}, {'status': 'PRIMARY', 'server_uuid': 'ae94200b-7932-11e4-9e81-a4badb30e16b', 'mode': 'READ_WRITE', 'weight': 1.0, 'address': '192.168.1.70:3306'}]
  activities =
}
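The `return` value above is a list of Python dictionaries, one per server. After re-adding a server it is worth confirming that it came back as a read-only SECONDARY and that the group still has exactly one PRIMARY. A minimal sketch that works on that list once it has been copied into Python; the helper name `split_roles` is hypothetical, and fetching the list programmatically is not shown:

```python
def split_roles(servers):
    """Return (primary_address, sorted secondary addresses) for one HA group.

    Raises ValueError unless the group has exactly one PRIMARY.
    """
    primaries = [s["address"] for s in servers if s["status"] == "PRIMARY"]
    if len(primaries) != 1:
        raise ValueError("expected one PRIMARY, found %d" % len(primaries))
    secondaries = sorted(s["address"] for s in servers if s["status"] == "SECONDARY")
    return primaries[0], secondaries


# Copied from the lookup_servers output above
servers = [
    {'status': 'SECONDARY', 'server_uuid': '7a45f71d-7934-11e4-9e8c-782bcb74823a',
     'mode': 'READ_ONLY', 'weight': 1.0, 'address': '192.168.1.71:3306'},
    {'status': 'SECONDARY', 'server_uuid': '9cf162ca-7934-11e4-9e8d-782bcb1b6b98',
     'mode': 'READ_ONLY', 'weight': 1.0, 'address': '192.168.1.76:3306'},
    {'status': 'PRIMARY', 'server_uuid': 'ae94200b-7932-11e4-9e81-a4badb30e16b',
     'mode': 'READ_WRITE', 'weight': 1.0, 'address': '192.168.1.70:3306'},
]
primary, secondaries = split_roles(servers)
print(primary)      # 192.168.1.70:3306
print(secondaries)  # ['192.168.1.71:3306', '192.168.1.76:3306']
```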

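b) If every server in the HA group goes down, Fabric does not restore the server states automatically after the instances restart. First run mysqlfabric group demote group_id-1. A server's status cannot be changed directly from FAULTY to SECONDARY; it must be set to SPARE first and then to SECONDARY, as follows: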

mysqlfabric group demote group_id-1

mysqlfabric group deactivate group_id-1

mysqlfabric server set_status 7a45f71d-7934-11e4-9e8c-782bcb74823a spare

mysqlfabric server set_status 7a45f71d-7934-11e4-9e8c-782bcb74823a secondary

Once all database instances are in the SECONDARY state, elect a master with:

mysqlfabric group promote group_id-1
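The recovery procedure above always runs in the same order, so it can be generated for any group and any list of server UUIDs (which would come from `lookup_servers`). A minimal sketch using exactly the commands shown above; the helper `recovery_commands` is hypothetical, only builds the argument lists, and does not check the actual state of the servers:

```python
def recovery_commands(group_id, server_uuids):
    """Build, in order, the mysqlfabric commands used above to recover a group
    whose servers are all FAULTY. Commands are returned, not executed."""
    commands = [
        ["mysqlfabric", "group", "demote", group_id],
        ["mysqlfabric", "group", "deactivate", group_id],
    ]
    for uuid in server_uuids:
        # FAULTY cannot be changed straight to SECONDARY, so go through SPARE
        commands.append(["mysqlfabric", "server", "set_status", uuid, "spare"])
        commands.append(["mysqlfabric", "server", "set_status", uuid, "secondary"])
    # With every server SECONDARY, elect a new PRIMARY
    commands.append(["mysqlfabric", "group", "promote", group_id])
    return commands


for cmd in recovery_commands("group_id-1", ["7a45f71d-7934-11e4-9e8c-782bcb74823a"]):
    print(" ".join(cmd))
```

To actually run them, each list could be passed in order to `subprocess.run(cmd, check=True)`, which stops at the first command that fails.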

II. MySQL Fabric HA Cluster Storage Balancing

1. Test preparation

a) Test script

cat test_add_subs_shards.py

import mysql.connector
from mysql.connector import fabric


def add_subscriber(conn, sub_no, first_name, last_name):
    # Route the statement by sharding key; MODE_READWRITE sends it to the PRIMARY of the owning group
    conn.set_property(tables=["test.subscribers"], key=sub_no,
                      mode=fabric.MODE_READWRITE)
    cur = conn.cursor()
    cur.execute(
        "INSERT INTO subscribers VALUES (%s, %s, %s)",
        (sub_no, first_name, last_name)
    )
    cur.close()


# Connect through the Fabric node on port 32274 instead of to a MySQL server directly
conn = mysql.connector.connect(
    fabric={"host": "localhost", "port": 32274, "username": "admin",
            "password": "admin"},
    user="root", database="test", password="",
    autocommit=True
)

# SCOPE_LOCAL: each statement goes to the single shard selected by its key
conn.set_property(tables=["test.subscribers"], scope=fabric.SCOPE_LOCAL)

for num in range(10):
    add_subscriber(conn, "%s" % num, "k%s" % num, "kw%s" % num)
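The script hard-codes `range(10)`, while the later runs insert 10,000, 100,000 and 1,000,000 records. One way to parameterise it while keeping the same row pattern is a small generator. `subscriber_rows` and its `start` argument are hypothetical additions, and the commented loop assumes the `conn` and `add_subscriber` defined in the script above.

```python
def subscriber_rows(count, start=0):
    """Yield (sub_no, first_name, last_name) tuples in the script's pattern:
    ("0", "k0", "kw0"), ("1", "k1", "kw1"), ...

    start lets a later run continue numbering instead of reusing keys.
    """
    for num in range(start, start + count):
        yield ("%s" % num, "k%s" % num, "kw%s" % num)


print(list(subscriber_rows(2)))  # [('0', 'k0', 'kw0'), ('1', 'k1', 'kw1')]

# With the connection from the script above:
# for row in subscriber_rows(10000):
#     add_subscriber(conn, *row)
```

A generator keeps memory use flat for the 1,000,000-record run, since rows are produced one at a time.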

2. Hash sharding tests

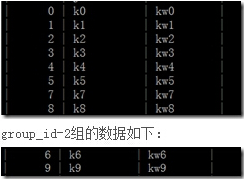

a) Insert 10 records while all three servers in group_id-1 are running and only one server in group_id-2 is running.

The data in group_id-1 is as follows:

b) Insert 10,000 records with all three servers running in both group_id-1 and group_id-2

After sharding, the number of rows stored in group_id-1 is:

mysql -P 3306 -h 192.168.1.70 -u root -e "select count(*) from test.subscribers"

Output:

+----------+
| count(*) |
+----------+
|     7138 |
+----------+

After sharding, the number of rows stored in group_id-2 is:

mysql -P 3309 -h 192.168.1.76 -u root -e "select count(*) from test.subscribers"

Output:

+----------+
| count(*) |
+----------+
|     2903 |
+----------+
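The two groups did not receive equal shares of the rows. A simplified model of hash-range sharding shows one way that can happen even with a uniform hash: each shard owns a contiguous slice of the hash space starting at its lower bound, so its share of rows tracks the width of its slice, not the number of shards. This is not Fabric's code; hashing keys with MD5 is an assumption here, and the bound values are hypothetical, since the bounds used in this test setup are not shown.

```python
import bisect
import hashlib

HASH_SPACE = 2 ** 128  # size of the MD5 output space


def key_hash(key):
    """Map a sharding key to an integer in [0, 2**128) using MD5 of str(key)."""
    return int(hashlib.md5(str(key).encode("utf-8")).hexdigest(), 16)


def find_shard(key, lower_bounds):
    """Index of the shard whose hash range contains key.

    lower_bounds is sorted; shard i owns [lower_bounds[i], lower_bounds[i + 1]).
    Hashes below the first bound wrap around to the last shard, as on a ring.
    """
    i = bisect.bisect_right(lower_bounds, key_hash(key)) - 1
    return i if i >= 0 else len(lower_bounds) - 1


def distribute(keys, lower_bounds):
    """Count how many keys land on each shard."""
    counts = [0] * len(lower_bounds)
    for key in keys:
        counts[find_shard(key, lower_bounds)] += 1
    return counts


# Hypothetical bounds: shard 0 owns 70% of the hash space, shard 1 the other 30%
bounds = [0, int(0.7 * HASH_SPACE)]
counts = distribute(("%s" % n for n in range(10000)), bounds)
print(counts)
```

Shard 0 ends up with close to 70% of the 10,000 keys because it owns 70% of the hash space; moving the second bound changes the split accordingly.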

c) Insert 100,000 records with all three servers running in both group_id-1 and group_id-2

After sharding, the number of rows stored in group_id-1 is:

mysql -P 3306 -h 192.168.1.70 -u root -e "select count(*) from test.subscribers"

Output:

+----------+
| count(*) |
+----------+
|    78719 |
+----------+

After sharding, the number of rows stored in group_id-2 is:

mysql -P 3309 -h 192.168.1.76 -u root -e "select count(*) from test.subscribers"

Output:

+----------+
| count(*) |
+----------+
|    31321 |
+----------+
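Converting the counts into shares makes the two runs easier to compare. In the 10,000-record run group_id-1 holds about 71.1% of the counted rows (7138 of 10041), and in the 100,000-record run about 71.5% (78719 of 110040), so the split between the two groups stays roughly constant as the data volume grows. The calculation as a small helper; the name `shares` is hypothetical:

```python
def shares(counts):
    """Fraction of all counted rows held by each group."""
    total = sum(counts.values())
    return {group: rows / total for group, rows in counts.items()}


# Row counts reported by select count(*) in runs b) and c)
run_10k = shares({"group_id-1": 7138, "group_id-2": 2903})
run_100k = shares({"group_id-1": 78719, "group_id-2": 31321})
for name, result in (("10k run", run_10k), ("100k run", run_100k)):
    # Print each group's share as a percentage rounded to one decimal place
    print(name, {group: round(frac * 100, 1) for group, frac in result.items()})
```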

d) Insert 1,000,000 records with all three servers running in both group_id-1 and group_id-2
